13. Perceptual Decision Making (Wong & Wang)¶

In this exercise we study decision making in a network of competing populations of spiking neurons. The network has been proposed by Wong and Wang in 2006 [1] as a model of decision making in a visual motion detection task. The decision making task and the network are described in the book and in the original publication (see References [1]).

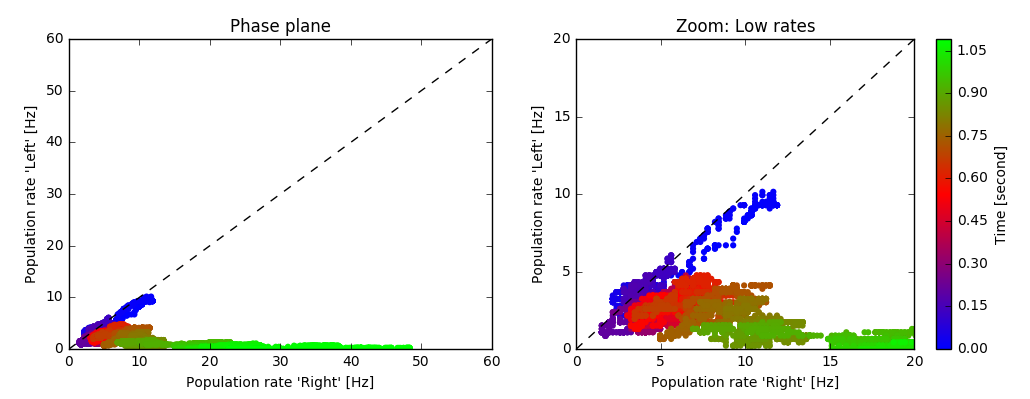

Decision Space. Each point represents the firing rates of the two subpopulations “Left” and “Right” at a given point in time (averaged over a short time window). The color encodes time. In this example, the decision “Right” is made after about 900 milliseconds.

To get a better understanding of the network dynamics, we recommend to solve the exercise Spatial Working Memory (Compte et. al.).

The parameters of our implementation differ from the original paper. In particular, the default network simulates only 480 spiking neurons which leads to relatively short simulation time even on less powerful computers.

Book chapters

Read the introduction of chapter 16, Competing populations and decision making. To understand the mechanism of decision making in a network, read 16.2, Competition through common inhibition.

If you have access to a scientific library, you may also want to read the original publication, References [1].

Python classes

The module competing_populations.decision_making implements the network adapted from References [1, 2]. To get started, call the function competing_populations.decision_making.getting_started() or copy the following code into a Jupyter notebook.

%matplotlib inline

from neurodynex.competing_populations import decision_making

decision_making.getting_started()

13.1. Exercise: The network implementation¶

Before we can analyse the decision making process and the simulation results, we first need to understand the structure of the network and how we can access the state variables of the respective subpopulations.

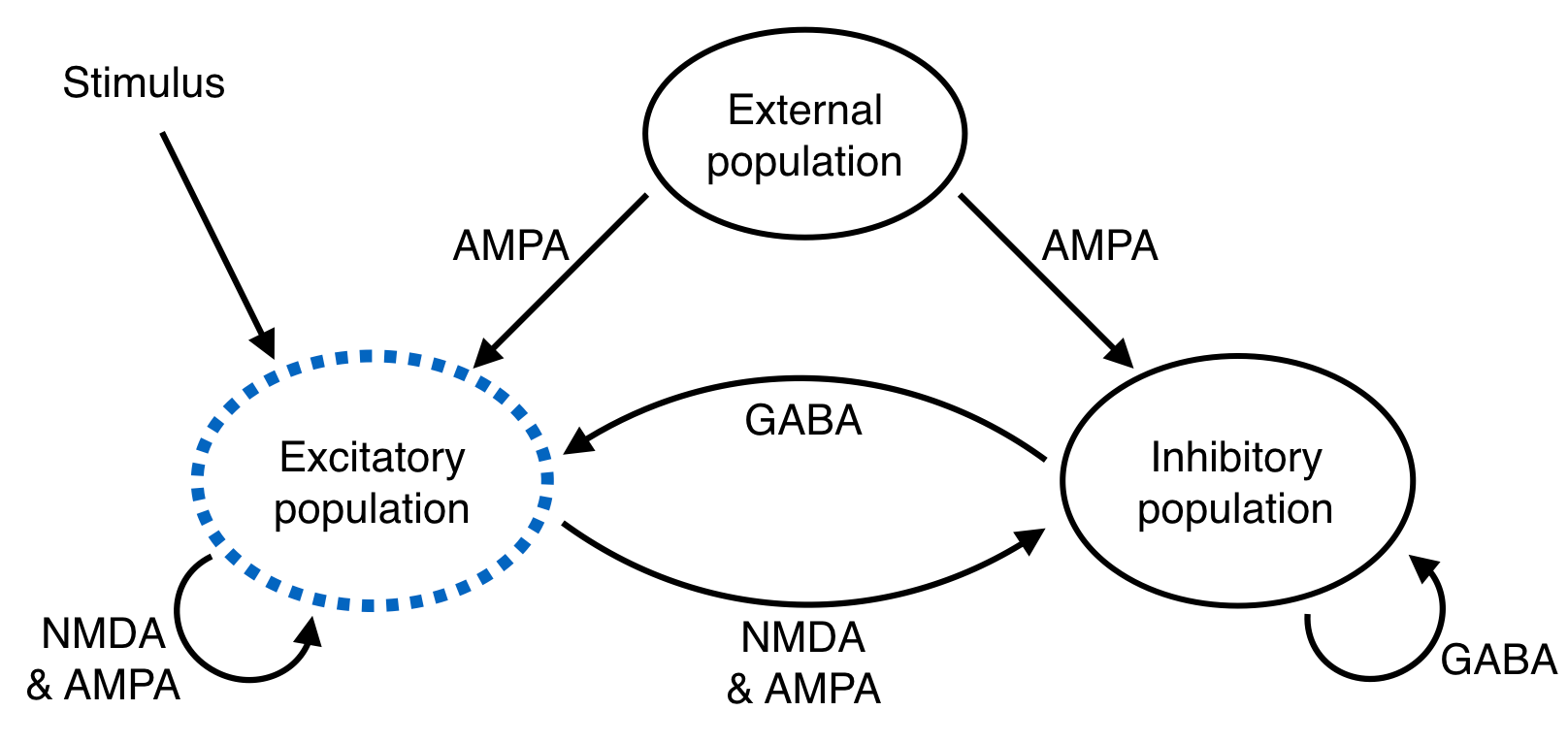

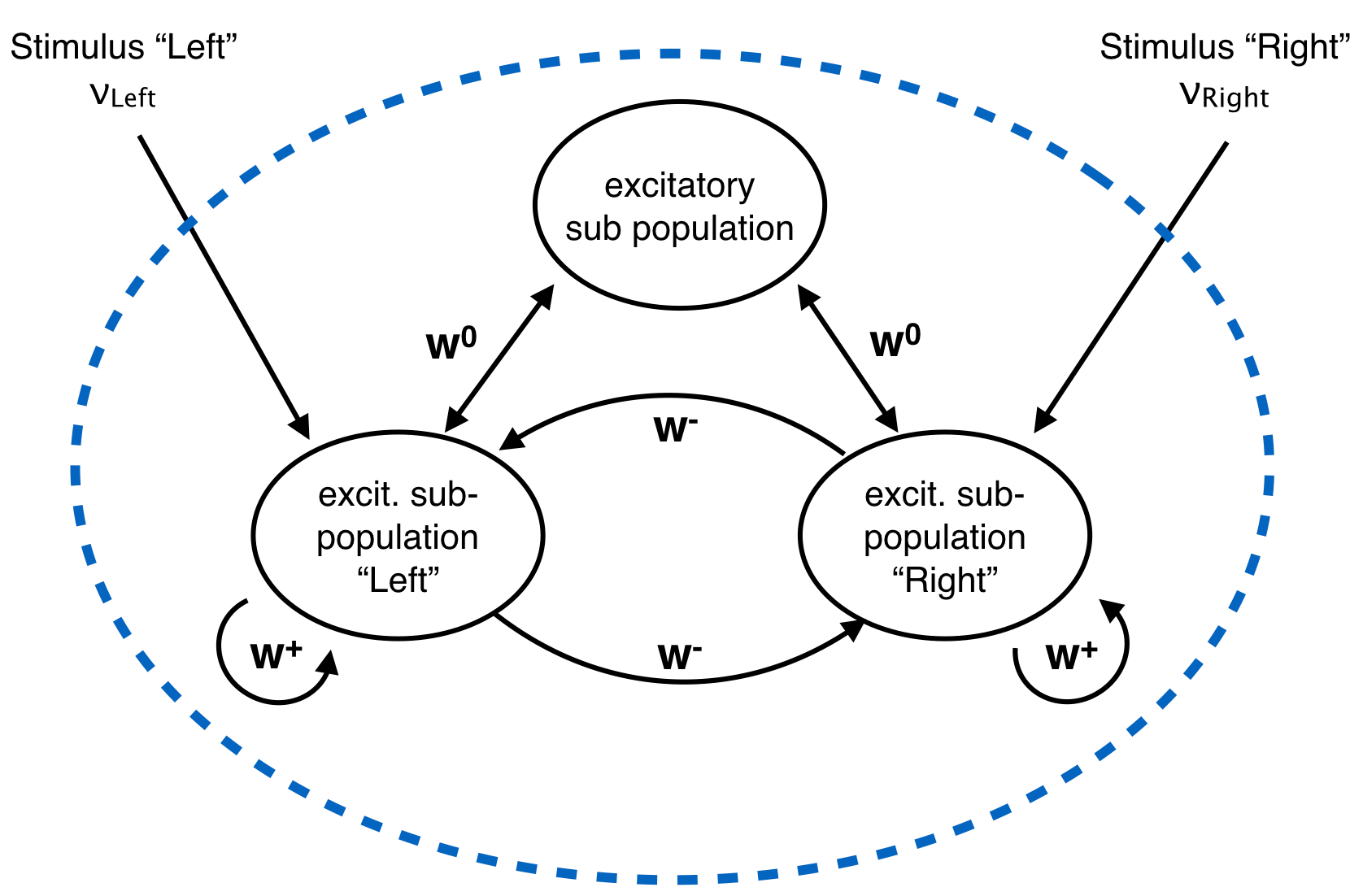

Network structure. The excitatory population is divided into three subpopulations, shown in the next figure.

Structure within the excitatory population. The “Left” and “Right” subpopulations have strong recurrent weights \((w^+ > w^0)\) and weak projections to the other \((w^- < w^0)\). All neurons receive a poisson input from an external source. Additionally, the neurons in the “Left” subpopulation receive poisson input with some rate \(\nu_{Left}\); the “Right” subpopulation receives a poisson input with a different rate \(\nu_{right}\).

13.1.1. Question: Understanding Brian2 Monitors¶

The network shown in the figure above is implemented in Brian2 in the function competing_populations.decision_making.sim_decision_making_network(). Each subpopulation is a Brian2 NeuronGroup. Look at the source code of the function sim_decision_making_network() to answer the following questions:

- For each of the four subpopulations, find the variable name of the corresponding NeuronGroup.

- Each NeuronGroup is monitored with a PopulationRateMonitor, a SpikeMonitor, and a StateMonitor. Find the variable names for those monitors. Have a look at the Brian2 documentation if you are not familiar with the concept of monitors.

- Which state variable of the neurons is recorded by the StateMonitor?

13.1.2. Question: Accessing a dictionary to plot the population rates¶

The monitors are returned in a Python dictionary providing access to objects by name. Read the Python documentation and look at the code block below or the function competing_populations.decision_making.getting_started() to learn how dictionaries are used.

- Extend the following code block to include plots for all four subpopulations.

- Run the simulation for 800ms. What are the “typical” population rates of the four populations towards the end of the simulation? (In case the network did not decide, run the simulation again).

- Without running the simulation again, but by using the same

resultsdictionary, plot the rates using different values foravg_window_width. - Interpret the effect of a very short and a very long averaging window.

- Find a value

avg_window_widthfor which the population activity plot gives meaningful rates.

import brian2 as b2 from neurodynex.tools import plot_tools from neurodynex.competing_populations import decision_making import matplotlib.pyplot as plt results = decision_making.sim_decision_making_network(t_stimulus_start= 50. * b2.ms, coherence_level=-0.6, max_sim_time=1000. * b2.ms) plot_tools.plot_network_activity(results["rate_monitor_A"], results["spike_monitor_A"], results["voltage_monitor_A"], t_min=0. * b2.ms, avg_window_width=2. * b2.ms, sup_title="Left") plot_tools.plot_network_activity(results["rate_monitor_B"], results["spike_monitor_B"], results["voltage_monitor_B"], t_min=0. * b2.ms, avg_window_width=2. * b2.ms, sup_title="Right") plt.show()

Remark: The parameter avg_window_width is passed to the function PopulationRateMonitor.smooth_rate() . This function is useful to solve one of the next exercises.

avg_window_width = 123*b2.ms

sr = results["rate_monitor_A"].smooth_rate(window="flat", width=avg_window_width)/b2.Hz

13.2. Exercise: Stimulating the decision making circuit¶

The input stimulus is implemented by two inhomogenous Poisson processes: The subpopulation “Left” and “Right” receive input from two different PoissonGroups (see Figure “Network Structure”). The input has a coherence level c and is noisy. We have implemented this in the following way: every 30ms, the firing rates \(\nu_{left}\) and \(\nu_{right}\) of each of the two PoissonGroups are drawn from a normal distribution:

The coherence level c, the maximum mean \(\mu_0\) and the standard deviation \(\sigma\) are parameters of sim_decision_making_network().

13.2.1. Question: Coherence Level¶

- From the equation above, express the difference \(\mu_{left}-\mu_{right}\) in terms of \(\mu_0\) and \(c\).

- Find the distribution of the difference \(\nu_{left}-\nu_{right}\). Hint: the difference of two Gaussian distributions is another Gaussian distribution.

Now look at the documentation of the function sim_decision_making_network() and find the default values of \(\mu_0\) and \(\sigma\). Using those values, answer the following questions:

- What are the mean firing rates (in Hz) \(\mu_{left}\) and \(\mu_{right}\) for the coherence level c= -0.2?

- For c= -0.2, how does the difference \(\mu_{left}-\mu_{right}\) compare to the variance of \(\nu_{left}-\nu_{right}\).

13.2.2. Question: Input stimuli with different coherence levels¶

Run a few simulations with c=-0.1 and c=+0.6. Plot the network activity.

- Does the network always make the correct decision?

- Look at the population rates and estimate how long it takes the network to make a decision.

13.3. Exercise: Decision Space¶

We can visualize the dynamics of the decision making process by plotting the activities of the two subpopulations “Left” / “Right” in a phase plane (see figure at the top of this page). Such a phase plane of competing states is also known as the Decision Space. A discussion of the decision making process in the decision space is out of the scope of this exercise but we refer to References [1].

13.3.1. Question: Plotting the Decision Space¶

- Write a function that takes two RateMonitors and plots the Decision Space.

- Add a parameter

avg_window_widthto your function (same semantics as in the exercise above). Run a few simulations and plot the phase plane for different values ofavg_window_width. - We can use a rate threshold as a decision criterion: We say the network has made a decision if one of the (smoothed) rates crosses a threshold. What are appropriate values for

avg_window_widthandrate thresholdto detect a decision from the two rates?

Hint: Use Brian’s smooth_rate function:

avg_window_width = 123*b2.ms

sr = results["rate_monitor_A"].smooth_rate(window="flat", width=avg_window_width)/b2.Hz

13.3.2. Question: Implementing a decision criterion¶

- Using your insights from the previous questions, implement a function get_decision_time that takes two RateMonitors , a

avg_window_widthand arate_threshold. The function should return a tuple (decision_time_Left, decision_time_right). The decision time is the time index when some decision boundary is crossed. Possible return values are (1234.5ms, 0ms) for decision “Left”, (0ms, 987.6ms) for decision “Right” and (0ms, 0ms) for the case when no decision is made within the simulation time. A return value like (123ms, 456ms) is an error and occurs if your function is called with inappropriate values foravg_window_widthandrate_threshold.

The following code block shows how your function is called.

>> get_decision_time(results["rate_monitor_A"], results["rate_monitor_B"], avg_window_width=123*b2.ms, rate_threshold=45.6*b2.Hz)

>> (0.543 * second, 0. * second)

The following code fragments could be useful:

smoothed_rates_A = rate_monitor_A.smooth_rate(window="flat", width=avg_window_width) / b2.Hz

idx_A = numpy.argmax(smoothed_rates_A > rate_threshold/b2.Hz)

t_A = idx_A * b2.defaultclock.dt

Run a few simulations to test your function.

13.4. Exercise: Percent-correct and Decision-time as a function of coherence level¶

We now investigate how the coherence level influences the decision making process. In order to estimate quantities like Percent-correct or Decision-time, we have to run multiple repetitions and then average. Use the function competing_populations.decision_making.run_multiple_simulations() to get the values for multiple runs. Pass your function get_decision_time to run_multiple_simulations() as shown here:

coherence_levels = [-0.1, -0.5]

nr_repetitions = 3

time_to_A, time_to_B, count_A, count_B, count_No = decision_making.run_multiple_simulations(get_decision_time,coherence_levels, nr_repetitions, max_sim_time=??, rate_threshold=??, avg_window_width=??)

The return value time_to_A is a matrix of size [nr_of_c_levels x nr_of_repetitions]. count_A is the number of times the network decides for A (= “Left” by convention). The other values are analogous. count_No is the number of runs where the network has not made a decision within the simulation time.

Check the documentation of run_multiple_simulations() and set the parameters according to the findings in previous questions.

13.4.1. Question: Percent-Correct, Time-to-decision¶

Using run_multiple_simulations(), run at least 15 simulations for each of the two coherence_levels = [+0.15, +0.8] and visualize the results. For each of the questions, ignore the simulations with “no decision”. Optionally, if you have sufficient time/computing-power, you could run more repetitions and more levels.

try a larger network.

- Visualize

Percent correctversuscoherence level. - Visualize

Time to decisionversuscoherence level. - Discuss your results.

import brian2 as b2

from neurodynex.competing_populations import decision_making

coherence_levels = [0.15, 0.8]

nr_repetitions = 15

# do not set other parameters (=defaults are used).

time_to_A, time_to_B, count_A, count_B, count_No = decision_making.run_multiple_simulations(get_decision_time, coherence_levels, nr_repetitions)

# you may want to wrap the visualization into a function

# plot_simulation_stats(coherence_levels, time_to_A, time_to_B, count_A, count_B, count_No)

13.5. References¶

[1] Wong, K.-F. & Wang, X.-J. A Recurrent Network Mechanism of Time Integration in Perceptual Decisions. J. Neurosci. 26, 1314–1328 (2006).

[2] Parts of this exercise and parts of the implementation are inspired by material from Stanford University, BIOE 332: Large-Scale Neural Modeling, Kwabena Boahen & Tatiana Engel, 2013, online available.